When Are We Going to Start Paying More Attention to Nvidia? Probably Now.

This month, Nvidia announced an open beta of Omniverse, a 3D simulation and collaboration platform, and launched Maxine, a cloud-native streaming video AI platform. It's now a phygital world: as the physical and digital worlds fuse to simulate reality in real time and in pixel-perfect detail, Nvidia is the company the AV industry should be paying more attention to.

Omniverse, which Nvidia bills as the world's first NVIDIA RTX-based 3D simulation and collaboration platform, combines the company's expertise in graphics, simulation and AI.

“Physical and virtual worlds will increasingly be fused,” said Jensen Huang, founder and CEO of NVIDIA. “ … This is the beginning of the Star Trek Holodeck, realized at last.”

Using the platform, remote teams can work on the same project simultaneously, as readily as they would jointly edit a document online: architects iterating on 3D building designs, animators revising 3D scenes, media and entertainment creators producing content, and engineers collaborating on designs.

Nvidia's open beta follows a year of early access in which Pixar, Ericsson, Woods Bagot, Foster + Partners, ILM (Lucasfilm) and more than 40 other companies, along with as many as 400 individual creators and developers, provided feedback to Nvidia's engineering team.

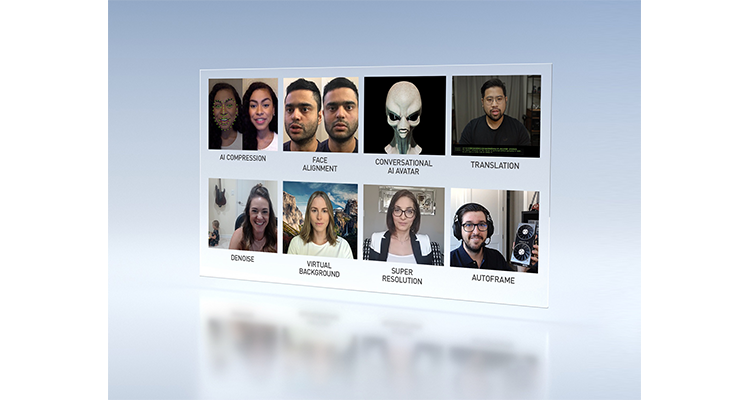

Nvidia also launched NVIDIA Maxine, a cloud-based suite of GPU-accelerated AI videoconferencing software designed to enhance streaming video, the internet's No. 1 source of traffic. Videoconference service providers running the platform on NVIDIA GPUs in the cloud can now offer users new AI effects, including gaze correction, super-resolution, noise cancellation, face relighting and more.

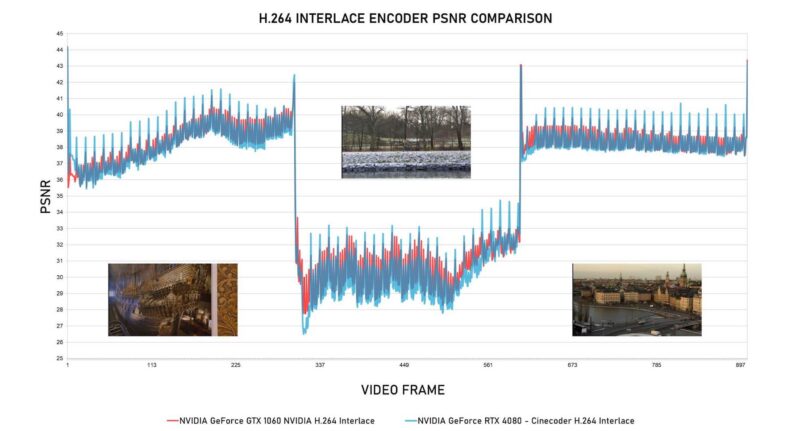

Data is processed in the cloud rather than on local devices, so end users can enjoy the new features without any specialized hardware. Using a new AI-based video compression technology, Maxine cuts the bandwidth required for video calls to one-tenth of what the H.264 streaming standard requires. Instead of streaming every pixel of the frame, the AI software analyzes the key facial points of each person on the call and then re-animates the face in the video on the receiving end.
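To make the bandwidth argument concrete, here is a back-of-the-envelope sketch comparing one uncompressed 720p frame with a single frame's worth of facial keypoints. The resolution, the 68-point landmark count and the float32 coordinate encoding are illustrative assumptions, not Maxine's actual parameters, and the comparison is against raw pixels rather than H.264, so it does not reproduce Nvidia's one-tenth figure.

```python
# Back-of-the-envelope comparison: raw pixels vs. facial keypoints per frame.
# All parameters below are hypothetical and chosen only for illustration;
# they are NOT Maxine's actual frame size, landmark count or encoding.

WIDTH, HEIGHT, CHANNELS = 1280, 720, 3  # assumed 720p RGB frame, 1 byte per channel
NUM_KEYPOINTS = 68                      # assumed landmark count (a common face-landmark convention)
BYTES_PER_COORD = 4                     # assumed float32 storage for each x or y value


def raw_frame_bytes(width: int, height: int, channels: int) -> int:
    """Size in bytes of one uncompressed frame."""
    return width * height * channels


def keypoint_frame_bytes(num_keypoints: int, bytes_per_coord: int) -> int:
    """Size in bytes of one frame's keypoints (an x and a y value per point)."""
    return num_keypoints * 2 * bytes_per_coord


raw = raw_frame_bytes(WIDTH, HEIGHT, CHANNELS)
kp = keypoint_frame_bytes(NUM_KEYPOINTS, BYTES_PER_COORD)

print(f"raw frame: {raw} bytes")
print(f"keypoints: {kp} bytes")
print(f"raw / keypoints: about {raw / kp:.0f}x")
```

In practice, a keypoint-based system would presumably also need to send at least one reference image of the face for the receiver to animate, and H.264 already compresses video heavily, which is why the real-world saving quoted above is far smaller than this raw-pixel ratio.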

Videoconference service providers will also be able to draw on Nvidia's research in generative adversarial networks (GANs) to offer a variety of new features:

- Faces can be automatically adjusted so participants appear to be facing each other during a call, while gaze correction simulates eye contact even when the camera isn't aligned with the user's screen.

- Participants can choose animated avatars that move automatically and realistically, following their voice and emotional tone in real time.

- Virtual assistants built on state-of-the-art AI language models can take notes, set action items and answer questions in human-like voices; they can also provide other conversational AI services such as translation, closed captioning and transcription.

Demand for videoconferencing at any given time can be hard to predict, with hundreds or even thousands of users potentially trying to join the same call. Maxine can scale its AI capabilities to hundreds of thousands of users by running AI inference workloads on NVIDIA GPUs in the cloud.

"Phygital" experiences will now grow faster in the IT world, and the AV industry will then be forced to follow. But wouldn't it be easier if we paid more attention to Nvidia in the first place?