Movie Time for Meta

Meta moves to first place in text-to-video generation with the launch of Movie Gen for AI-enabled content creators

We’ve all been impressed by the first text-to-video AI systems and by how they can turn simple text inputs into a unique video drawn from our imaginations. Now Meta’s Movie Gen moves the needle forward, outperforming previous state-of-the-art models and commercial systems on benchmarks.

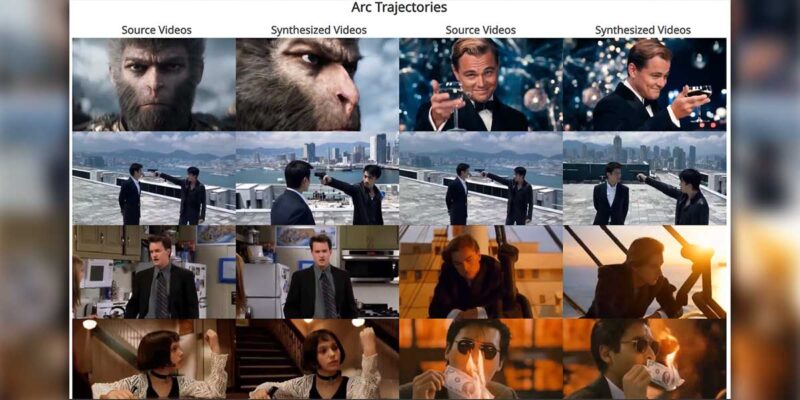

One look at the examples demonstrates this.

Text-to-video is a race run by tech giants and AI pioneers. Movie Gen pushes Meta forward not just by a nose but by several lengths, and the result we can expect is even more competitive advances in text-to-video. Movie Gen will not be available for open developer use; it is intended for collaboration with the entertainment industry and content creators. It appears purpose-built to quickly create video ads for Facebook and Instagram.

This new tool lowers production costs, shortens workflow timelines and reduces the number of people who have to be involved. Movie Gen joins other AI video generation tools such as OpenAI’s Sora, Google’s Lumiere, Runway Gen-2, Stability AI’s Stable Video Diffusion, Pika Labs and Nvidia’s Vid2Vid.

Yes, all current text-to-video AI models, including Movie Gen, have limitations, but the race (to decrease “creation” time and improve the ability to scale up) is still only at the starting gate. Meta Movie Gen is a set of foundation models for generating high-quality 1080p HD videos with synchronized audio, personalized characters and precise video editing capabilities. Building it required Meta to push forward multiple technical innovations in architecture, training objectives, data recipes, evaluation protocols and inference optimizations.

The models excel in multiple tasks including text-to-video synthesis, video personalization, video editing, video-to-audio generation and text-to-audio generation.

Going Personal

Movie Gen models offer capabilities such as precise instruction-based video editing and the generation of personalized videos based on a user’s image. You can start with an image of yourself (or someone else) and then create a video with that image as the centerpiece.

The Personalized Text-to-Video (PT2V) model architecture extends the 30B-parameter Movie Gen Video model (30 billion parameters, the learned values that define the model) by incorporating identity information from a reference image. The goal is to maintain the identity of the person in the reference image while generating videos that follow the text prompt.

A vision encoder extracts identity features from the reference image, which are then used alongside the text prompt to generate personalized videos.
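To make the idea concrete, here is a toy sketch of how identity features and a text prompt might be combined into one conditioning sequence. It is an illustration only, not Meta’s implementation: the function names (`encode_text`, `encode_identity`, `build_conditioning`), the hash-based stand-in "encoders", the number of identity tokens and the choice to simply concatenate the two sequences are all assumptions made for this example.

```python
import hashlib
from typing import List

Vector = List[float]
DIM = 8  # toy embedding width (assumption; real models use far larger vectors)


def _toy_embed(data: bytes, dim: int = DIM) -> Vector:
    """Deterministic stand-in for a learned encoder: hash bytes into `dim` floats in [0, 1]."""
    digest = hashlib.sha256(data).digest()
    return [b / 255.0 for b in digest[:dim]]


def encode_text(prompt: str) -> List[Vector]:
    """Hypothetical text encoder: one toy embedding per whitespace-separated word."""
    return [_toy_embed(word.encode("utf-8")) for word in prompt.split()]


def encode_identity(image_bytes: bytes, num_tokens: int = 4) -> List[Vector]:
    """Hypothetical vision encoder: a few 'identity tokens' derived from the reference image."""
    return [_toy_embed(image_bytes + bytes([i])) for i in range(num_tokens)]


def build_conditioning(prompt: str, image_bytes: bytes) -> List[Vector]:
    """Join identity tokens and text tokens into one sequence for the video generator to attend to."""
    return encode_identity(image_bytes) + encode_text(prompt)


conditioning = build_conditioning("a person surfing at sunset", b"fake-image-bytes")
print(len(conditioning), len(conditioning[0]))  # number of tokens, embedding width
```

In a real system the stand-in encoders would be trained neural networks, and the video generator would use the combined sequence to keep the person recognizable while following the prompt.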

The exciting part of AI is how fast the technology moves as we wait to see who will take the next lap of the race. In less than six months, we’ll see another iteration that pushes forward.

The initial impact will revolutionize social media reels, digital signage clips, product videos and other short-form video, but long-form video is still in the distance. AI itself may move faster, but long-form video requires additional technical development for infrastructure and support (just as Meta explains that such development was necessary to accomplish its short Movie Gen videos).

But now it’s clear: within five years, you’ll have commercially available (and probably affordable via cloud) text-to-video that can generate an hour or more of quality video. In ten years, it may be that filmmaking will move from Hollywood to AI-land.

- Technical details: https://ai.meta.com/static-resource/movie-gen-research-paper

- Examples: https://go.fb.me/MovieGenResearchVideos