I’m Bringin’ Codecs Back! What?

If there has been any trend in AV that is glaringly apparent over the last few years, it is that the number of hard codecs being sold for video conferencing is declining. Although videoconferencing is being more widely adopted, the hardware sales dollars are decreasing, in large part because the codec has gone to software, moved to the cloud, or been virtualized somewhere and shared by many companies as needs arise.

I even wrote a blog post in 2014 about the phenomenon, asking if Polycom and Lifesize would be the next Blockbuster.

The hard codec essentially became a casualty as efficiencies in virtualization and improvements in internet bandwidth came together. Companies decided they didn’t want to own and manage all that infrastructure, and that the new soft-codec-based options had become “good enough”.

Sure, there are always holdouts: companies with such a huge sunk cost that they just continue forward, or those that really do have quality and security needs that make them slightly uncomfortable with a solution hosted elsewhere. However, those situations are becoming fewer and farther between.

Good enough could be achieved at 1/4 the cost of great, so the march of commerce and convenience again trumped quality.

So why on earth, with all of these events in motion and well beyond the point of no return, would I declare that I am now bringing codecs back? Well, if the mantra of real estate is “Location, location, location!”, then the mantra of AV should be “Application, application, application!” And the application at hand here (pun intended) is…

Haptics. Specifically, the Internet of Touch, or telemanipulation.

I was at SIGGRAPH this year and I ran into the control room guys from Christie. They partnered with TechViz to create an amazing 3D projection demo where you wear 3D glasses and look at the layout of a virtual piece of machinery. You reach out and grab their haptic joystick, and then manipulate it to reach inside the piece of machinery to find a bolt. Here is the really tricky part. The haptic joystick is connected to a resistance arm. If you try to press it forward when you are up against the side of the virtual piece of machinery in front of you, it doesn’t move. You have to move your tool until you are lined up with the opening, and once you are, the tool will move forward in 3D space again. It’s like a giant, incredibly complex game of Operation for engineers.

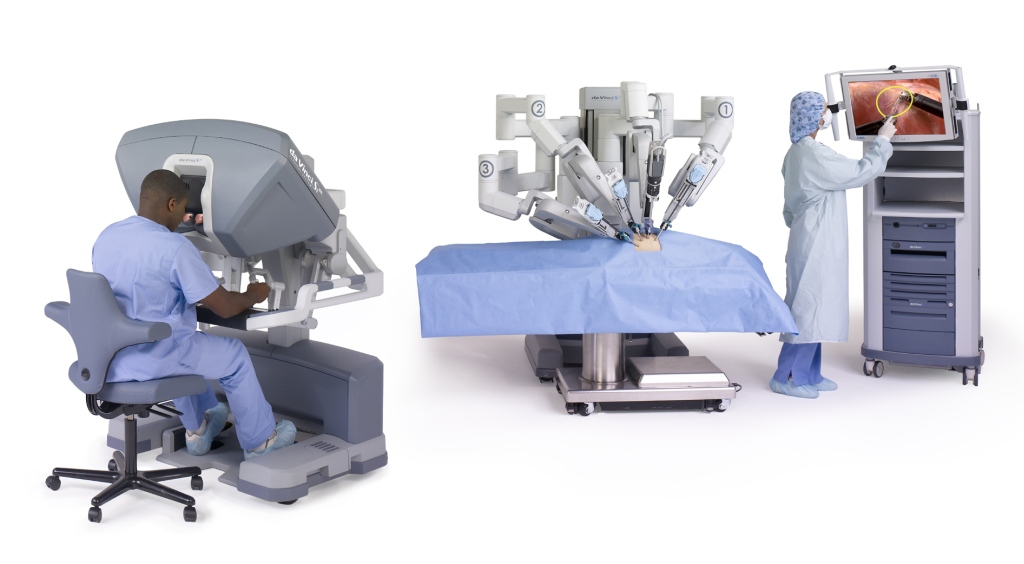

Now if you’re thinking, “Wow Mark! That’s cool but completely irrelevant to anything but gaming”, you’re wrong. Start to think of the applications for telemanipulation: control of robotics in remote areas, manipulation of tools for remote service, control of NASA’s Robonaut, or of course even telesurgery.

An article about the last item, telesurgery, actually inspired me to write this. Think about the ramifications of anything but the highest level of real-time audiovisual quality and control over something like a surgical instrument, and then ask yourself if you’d trust this type of communication to anything but a high-end on-premises system with built-in redundancy.

Your video communication drops for 2 seconds right when you are cauterizing an artery during microsurgery, and a patient bleeds out on the table. Your haptic-controlled robot lags a few extra milliseconds and the command to stop moving forward is delayed, creating a laser incision in the lung during heart surgery.

Oops!

Now consider that the engineering and service examples could be just as critical to life safety. It all adds up to the codec being an extremely relevant piece of hardware in a situation where haptics are involved, one that may even have greater implications for new encoding and decoding strategies, given that the existing protocols were meant for voice and video, not touch.

If you are in the integration business and want to leverage your hard codec experience in a market that has virtually disappeared, I suggest you look toward specialty applications across verticals where the hard codec may have just found its new home.

The hard codec isn’t dead after all. It has just moved from the conference room to the control room or the operating room.

So go ahead, get your codec on. We’re bringin’ codecs back.