The Sound of Sound

In a world of steadily increasing digitization, robotization and electronic origination of everything from voicemail prompts to virtual customer support, the musical world has not been left out of the progression to artificial sources. Of course there remains the world of orchestral, operatic and some styles of folk/country music performances, which are as unrepentantly “live” acoustic events as they ever were. But even those supposedly sacred spaces have been quietly infiltrated by recorded tracks, electronic sources, synthesized music support and all kinds of “augmentation.” The house of worship (HOW) music world is no different in this respect from any other style or format.

Perception Is Necessary

What this trend towards artificial sources has created is a lack of awareness of what things actually sound like, and of how natural, acoustic sources create a “space” or congregate to become a performance as perceived by the listener. Without exposure to non-reinforced natural acoustic sources, it is extremely difficult to form any kind of mental image of what people, instruments and, most importantly, multiple individual instruments or groups of anything actually sound like. Without that mental “file,” the tendency is to assume that every source needs to be processed or helped in some way in order to sound right.

Certainly a portion of this predicament has been created by the world of sampling and the stunning growth of digital keyboard and synthesizer capabilities. Literally anything that produces sound can be sampled and reused whenever and wherever it is desired. There is no need to find someone who knows how to play, use or operate that device, no need to rehearse, no need to deal with actual people and their numerous “issues,” and most importantly for the professional musicians, no need to pay them. Punching up that cembalo dulcimer or Irish harp sample on a digital keyboard is much faster and easier than getting a person to play one, let alone finding one.

The problem is that however accurate the sample might be (and that varies dramatically depending on the sampling device/method and from prerecorded loop to prerecorded loop), it’s still just a sample — a minuscule, frozen moment of some portion of the acoustic output of the particular source. It is most certainly not a high-definition picture of the whole of the source, especially not of that source as heard live with all of its complex harmonics and ear/brain/room interactions.

Do not forget that any “sample” is really only a spaced series of ‘stills’ of the loudness (amplitude) of a continuously varying analog input signal. If it helps, think of them as frames in a movie, so many per second, each of which is then saved as a binary number (a string of zeros and ones) in some kind of computer-style memory. Then, when you play the sample, those stored loudness values are played back (hopefully) in the correct order and reconstituted into a continuous waveform by a chip called a DAC (digital-to-analog converter), and what we hear is a pseudo-accurate replica of the sound that was originally ‘sampled.’
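To make the “frames in a movie” idea concrete, here is a minimal Python sketch. It assumes a pure tone near G4 as the “analog” input, a 48 kHz sample rate and 16-bit resolution; these are illustrative choices, not the settings of any particular sampler. Each stored ‘still’ is a signed 16-bit integer (sixteen zeros and ones), and the reconstruction step only rescales those integers; a real DAC also smooths its output with a reconstruction filter, which this sketch leaves out.

```python
import math

SAMPLE_RATE = 48_000                   # "frames" per second (illustrative)
BIT_DEPTH = 16                         # bits stored per frame (illustrative)
FULL_SCALE = 2 ** (BIT_DEPTH - 1) - 1  # 32767: largest signed 16-bit value

def analog_signal(t: float, freq: float = 392.0) -> float:
    """Stand-in for the continuous input: a pure tone near G4, peak amplitude 1."""
    return math.sin(2 * math.pi * freq * t)

def sample(duration_s: float) -> list[int]:
    """Take evenly spaced 'stills' and quantize each to a signed integer code."""
    n = round(duration_s * SAMPLE_RATE)
    return [round(analog_signal(i / SAMPLE_RATE) * FULL_SCALE) for i in range(n)]

def reconstruct(codes: list[int]) -> list[float]:
    """Crude DAC stand-in: scale codes back to amplitudes (no smoothing filter)."""
    return [c / FULL_SCALE for c in codes]

codes = sample(0.001)                    # one millisecond of audio
print(len(codes), "samples:", codes[:4])
# One sample as the 16 bits actually stored (this value is positive,
# so no two's-complement conversion is needed to display it).
print(format(codes[1], "016b"))
worst = max(abs(r - analog_signal(i / SAMPLE_RATE))
            for i, r in enumerate(reconstruct(codes)))
print(f"worst-case error: {worst:.1e} of full scale")
```

Even in this idealized case, every stored value is rounded to the nearest available step, so the replay is close to, but never identical with, the original waveform.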

How high the sample rate was or is, what resolution it was archived at, how slick the microphones were: none of that is relevant. The actual sample library size needed to truly recreate any natural source beyond the ability of the human ear to discern the difference is so huge as to be outside the realm of feasibility for all practical purposes. Yes, I’m sure you could stack up enough storage devices to accomplish the task (terabytes, at least), but that would be for just one specific guitar and one specific player, for example. If you change just one string’s tuning by even a semitone, you have to start over.
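The “terabytes, at least” estimate is easy to sanity-check with back-of-the-envelope arithmetic. The sketch below computes the size of raw, uncompressed PCM audio from sample rate, bit depth, channel count and duration. The library dimensions in it (string/fret positions, velocity layers, articulations, repeated takes, microphone positions, take length) are hypothetical placeholders, not figures from any real product; the point is how quickly the multipliers compound.

```python
def pcm_bytes(sample_rate: int, bit_depth: int, channels: int, seconds: int) -> int:
    """Size of raw, uncompressed PCM audio in bytes (bit depth a multiple of 8)."""
    return sample_rate * (bit_depth // 8) * channels * seconds

# HYPOTHETICAL library dimensions for one guitar, one player, one tuning.
positions = 6 * 20        # 6 strings x 20 frets (placeholder)
velocity_layers = 16      # how hard each note is played (placeholder)
articulations = 10        # picked, strummed, muted, slid... (placeholder)
round_robins = 8          # repeated takes so repeats don't sound identical (placeholder)
mic_positions = 4         # placeholder
seconds_per_take = 10     # note plus its natural decay (placeholder)

takes = positions * velocity_layers * articulations * round_robins * mic_positions
total_bytes = pcm_bytes(96_000, 24, 2, takes * seconds_per_take)  # 96 kHz, 24-bit stereo
print(f"{takes:,} takes, {total_bytes / 1e12:.2f} TB uncompressed")
```

With these made-up numbers the script prints `614,400 takes, 3.54 TB uncompressed`, and that is still only a finite grid of frozen moments from one instrument in one tuning.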

More prosaically, especially in the world of HOW music, take the case of the legendary Hammond B3 Organ and Leslie Speaker combination. If you move just one tone bar 1/4”, it’s a wholly different set of parameters, and there are lots of tone bars and lots of possibilities (pardon me while I fire up the Cray supercomputer to calculate the possibilities — we’ll get back to you with the answer in a couple of months).
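Counting the settings themselves, at least, needs no supercomputer. A minimal sketch, assuming one set of nine drawbars (the “tone bars” above) with nine positions each, 0 through 8, as on a standard Hammond console, and ignoring in-between positions, the other manual, percussion, vibrato/chorus and the Leslie entirely:

```python
DRAWBARS = 9      # drawbars in one set
POSITIONS = 9     # each drawbar: 0 (pushed in) through 8 (pulled fully out)

registrations = POSITIONS ** DRAWBARS
seconds_per_year = 60 * 60 * 24 * 365
years = registrations / seconds_per_year   # one second of audio per setting

print(f"{registrations:,} settings for one drawbar set")
print(f"{years:.1f} years to record one second of each")
```

That is 387,420,489 settings for a single drawbar set, and recording just one second of each would take about 12.3 years of nonstop playing. Counting is trivial; capturing how each setting actually sounds is the hard part.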

Now throw into the equation the variables associated with the rotating speaker in the Leslie cabinet and the absolutely humongous number of harmonics and overtones it adds to the “sound” of the organ. Oh, and by the way, how loud the speaker is playing also builds in another googol (a googol is 10¹⁰⁰, a 1 followed by 100 zeros) of dataset variables, but you should get the point by now. (Let me add another two or three NSA-grade supercomputers to the calculation process to get that answer in a year or so.)

That doesn’t mean you can’t fool the ear into thinking it heard the real thing, sort of, just like you can use multichannel time differences to create localization effects or perceptive positioning of a source. But it’s not the real thing; it’s just close enough that you can get away with it… sometimes.

There’s an old remark attributed to Sir George Martin (the celebrated Beatles producer/engineer) that goes, “If you want real strings, hire real string players.” Another well-used phrase is that you only get points for being close in horseshoes, hand grenades and nuclear weapons.

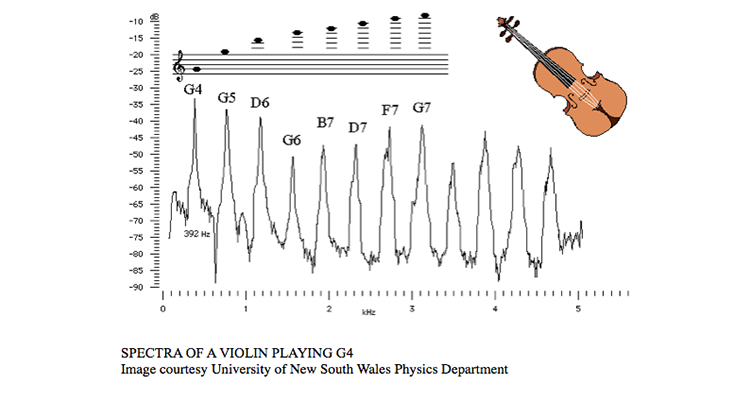

If for some reason you believe that by now, in the 21st century, we should be able to do this perfectly, think again. Here is a spectral graph of two ‘samples,’ one of a violin and the other of a flute, both playing the same note, G4. G4 has a fundamental frequency of 392 Hz, and its harmonics are all integer multiples of that fundamental (784 Hz, 1176 Hz, 1568 Hz, etc., or roughly 800 Hz, 1200 Hz and 1600 Hz).
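The numbers above can be reproduced in a few lines. This sketch assumes standard A440 equal temperament (A4 = 440 Hz, twelve equal semitones per octave), under which G4 sits two semitones below A4; the harmonics are then simply integer multiples of the fundamental.

```python
A4_HZ = 440.0  # assumed tuning reference (A440)

def equal_tempered(semitones_from_a4: int) -> float:
    """Frequency of the note n semitones above (negative: below) A4."""
    return A4_HZ * 2 ** (semitones_from_a4 / 12)

g4 = equal_tempered(-2)                       # G4 is two semitones below A4
harmonics = [n * g4 for n in range(1, 6)]     # fundamental plus 4 overtones

print(f"G4 fundamental: {g4:.2f} Hz")
print("harmonics (Hz):", [round(h) for h in harmonics])
```

This prints a fundamental of 392.00 Hz and harmonics of 392, 784, 1176, 1568 and 1960 Hz. What differs between a violin and a flute is not where these lines fall but how strong each one is.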

It is immediately obvious that although they are both playing the same note, the frequency spectrum of their G4 content is radically different, which is why we know when we hear the actual instruments themselves making this note that one is a flute and the other is a violin.
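To see how the same pitch can carry very different spectra, this sketch synthesizes two tones with the same 392 Hz fundamental but different harmonic weights: a “bright” set with strong upper harmonics (loosely violin-like) and a “pure” set dominated by the fundamental (loosely flute-like). The weights are hypothetical, made up for illustration rather than measured from real instruments. A single bin of a discrete Fourier transform, evaluated at each harmonic, then recovers each tone’s spectral fingerprint, which is essentially what a spectral graph displays.

```python
import cmath
import math

RATE = 48_000          # sample rate in Hz
F0 = 392.0             # G4 fundamental, rounded
N = RATE // 8          # 0.125 s of audio: exactly 49 cycles of 392 Hz

# HYPOTHETICAL harmonic amplitudes for harmonics 1..5; not measured data.
BRIGHT = [1.0, 0.8, 0.6, 0.5, 0.4]    # strong upper harmonics ("violin-ish")
PURE = [1.0, 0.2, 0.05, 0.02, 0.01]   # mostly fundamental ("flute-ish")

def synth(weights: list[float]) -> list[float]:
    """Additive synthesis: sum of sine waves at integer multiples of F0."""
    return [sum(w * math.sin(2 * math.pi * F0 * (k + 1) * n / RATE)
                for k, w in enumerate(weights))
            for n in range(N)]

def amplitude_at(signal: list[float], freq: float) -> float:
    """Amplitude of one frequency component, via a single DFT bin."""
    acc = sum(x * cmath.exp(-2j * math.pi * freq * n / RATE)
              for n, x in enumerate(signal))
    return 2 * abs(acc) / len(signal)

spectra = {}
for name, weights in (("bright", BRIGHT), ("pure", PURE)):
    tone = synth(weights)
    spectra[name] = [amplitude_at(tone, F0 * h) for h in range(1, 6)]
    print(name, [round(a, 2) for a in spectra[name]])
```

Both tones have the same pitch, yet their harmonic profiles differ sharply. Because the analysis window holds a whole number of cycles of every harmonic, each DFT bin recovers its weight cleanly; with real recordings, windowing and noise make the picture messier.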

(For an excellent reference to the actual frequency spectrum of any musical instrument, go here. This site allows you to look up a huge number of instruments and see the frequency range they produce and the bandwidth they cover.)

Why Does This Matter?

Why does all this matter to the HOW readership, and especially to those tasked with, or who have volunteered for, sound reinforcement duties? It matters because the people who attend the service, no matter the denomination or style of worship, will use their ears to judge the success or failure of our efforts. Sure, they might not know you’re using a sample, or how much you’re processing the signal, but they do know whether it sounds real and right to their ears. Even if they have never actually heard the specific instrument up close, they have a mental ‘picture’ of what it is supposed to sound like, and that’s all that matters, both to you and to them.

So before we get to the rules of the road, so to speak, there is one thing that every “sound” person should do, no matter what level of experience you have, and that is LISTEN to the real thing! This means, quite simply: spend the time to carefully listen to what the instrument or voice sounds like in its natural, un-processed, un-reinforced, un-sampled, un-digitized state.

Go to a rehearsal or practice session, a music school or a store that provides lessons, or anyplace you can actually hear an acoustic guitar or any other instrument, and just listen. Build up that mental “file” of what the instrument sounds like to your ear, so that when you have to reinforce it you will know whether the microphone or pickup is giving you something that actually resembles the real, unadulterated sound of that particular source.

Equally important is to listen to the musicians in your worship space without any technology between your ear and their sound. You need to know what they are hearing, before you can create a presentation of that sound to the congregants.

Thus, the golden rule in worship sound reinforcement (or any reinforcement, for that matter) should be that every sound reproduction effort focuses on producing a convincing and realistic presentation of what is happening ‘on stage’ or wherever the live source(s) are.

This means recognizing some essential rules that more often seem to be forgotten, if not ignored, in current practice. These rules are like the famous t-shirt showing Einstein’s face that says “186,000 miles per second is not just a good idea, it’s the law.”

Unlike the absolute rules of physics (at least non-quantum physics), these rules are like the edge barriers on freeways — stay in lane and you’re fine, drift too far and…

Rule Number 1 — Not everything in a mix needs to be heard all the time. Music is a dynamic entity and it flows; instruments move in and out of focus. Trying to make everything audible all the time leads to mush. Sure, the worship leader, pastor, minister, priest or whoever is the primary speaker MUST be heard, just like the lead vocal, but even the head honcho has to take a breath now and then.

Rule Number 2 — Unless you’re Phil Spector, a wall of sound is a bad idea. Music needs space around both the total program and each individual part. Think in 3D, not 2D. Think vertically and horizontally. Way, way, way back in the early 1970s when I was just a newbie engineer, one of the studio’s old dogs wandered in about 1 a.m. when I was working on “my stuff.” He just stood there for a while and then when the 16-track stopped he said, “Nice sounds, but you have no space between the speakers.”

I just looked at him with a deer-in-the-headlights expression, and he said, “Here, I’ll show you,” and proceeded over about 10 minutes to redo the mix so the rhythm section sat stacked up in the middle and the other instruments were spread out left to right and top to bottom, making nice holes for vocals and solos. You could even hear the cool reverb effects I had spent so much time on.

Rule Number 3 — If it’s too loud, it’s too loud. Proving you can reach the physical performance limits of the sound system proves nothing except you have the wrong focus. Loudness is no substitute for quality or esthetics and is not a requirement.

Just because you have a system that can produce 100 dB SPL or more does not mean you have to add the entire audience to the rolls of the hearing impaired. Besides, as level goes up, the ability to discriminate among the various parts of any performance goes down. Your ability to discern subtleties vanishes into the dBs.
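To put “100 dB and up” in perspective, here is a sketch using the NIOSH recommended exposure limit for occupational noise: 85 dBA for 8 hours, with the allowable time halving for every 3 dB increase (the 3 dB exchange rate). Applying a workplace criterion to a congregation is our own assumption, and the figures assume a steady A-weighted level at the listener’s ears, but the trend is hard to argue with.

```python
def niosh_allowed_minutes(level_dba: float) -> float:
    """NIOSH REL: 480 min (8 h) at 85 dBA, halved for every 3 dB above that."""
    return 480 / 2 ** ((level_dba - 85) / 3)

for level in (85, 91, 94, 100, 103):
    print(f"{level} dBA -> {niosh_allowed_minutes(level):.1f} minutes per day")
```

At a sustained 100 dBA the daily allowance is used up in 15 minutes, and at 103 dBA in 7.5 minutes.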

Rule Number 4 — Mixing is a subtractive art, not a game of how many faders you can push to the stops. 99 44/100 percent of the time, less is more. Watch the really good live sound engineers. They don’t just jam everything up and try to make sense of the resulting cacophony. They blend, scale, assess and control the sound. In fact, years ago the job used to be called “balance engineer.” It’s still a game of balance!

Rule Number 5 — If it doesn’t make sense, it won’t sound good. Think before reaching for that knob or throwing technology at something. Just because you can do something doesn’t mean you should. Having a digital mixer with copious amounts of signal processing does not imply that every effect has to be applied to every instrument and voice on every performance. The gear won’t spoil if you don’t use it. Before you insert that EQ, compressor, etc., decide why you’re doing it and what your goal is.

Rule Number 6 — It’s still ultimately all about the service and the word being delivered to the parishioners, not your technical genius. Showing how cool you can be only shows how little you understand why you’re there.

I like to think about this whole process using another infamous slogan seen on 1970s bumper stickers in the northeast: “E=MC²±2dB.” The rules are there, but this is live HOW sound and things need some maneuvering room to function. Be smart and be flexible, and the performance will always sound better.