The Real Enemy of 4k: Infrastructure

There has been a lot of talk about 4k. There are actually several formats that people are describing when they use the term 4k. There is true 4k from cinema (4096 by XXXX) and then UHD-1/2160p (3840 by 2160), which is the type of display hitting the market. For the purposes of this blog, I am using the term 4k/UHD to refer to all of these formats, even though in principle they are not all the same.

Last year I wrote a blog on the subject and on the differences between the formats, describing 8 ways in which 4k/UHD may run into trouble. Since then, 4k/UHD screens have started to hit the market, Netflix has announced that some 4k/UHD content would be coming in its popular series House of Cards, and many people have made their cases for and against 4k/UHD.

The best cases I have heard for 4k/UHD are typically for custom-created content on large format displays, or for having four 1080p windows (quad HD) open on one display. I can’t argue that a 4k/UHD display would not be advantageous or desirable in those scenarios. Figures have also been released projecting that 4 million 4k/UHD TVs will be shipping by 2017, so despite arguments that this technology may fizzle like 3D did (I predicted 3D would die out in 2010), I don’t believe 4k/UHD will share the same fate.

So the enemy of 4k/UHD has most commonly been identified as content. I agree in some respects, but existing 1080p content can be scaled to 2160p fairly easily. It is an exact doubling in each direction, after all, so onboard scalers should have a much easier time doing the math than they did when we asked them to take 480 up to 720 or 1080. Cinema content will be problematic in some ways, as it is shot at 4096, so those 4096-pixel-wide images will be letterboxed or cropped again to play on new 3840-pixel-wide displays. We’re used to that, though, especially if the films are in anamorphic widescreen instead of the 16:9 HDTV aspect ratio.
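To see why a clean 2x jump is friendlier to a scaler than 480 to 720, here is a minimal Python sketch. It uses exact fractions for the scale factors and plain pixel doubling (nearest neighbour) for the 2x case; real onboard scalers use smarter filters, so treat `upscale_2x` and the other helper names as hypothetical illustrations, not how any particular TV does it.

```python
from fractions import Fraction


def scale_factor(src: int, dst: int) -> Fraction:
    """Exact ratio a scaler has to apply along one axis (dst / src)."""
    return Fraction(dst, src)


def upscale_2x(frame: list[list[int]]) -> list[list[int]]:
    """Nearest-neighbour 2x upscale: every source pixel becomes a 2x2 block."""
    out: list[list[int]] = []
    for row in frame:
        doubled = [px for px in row for _ in range(2)]  # repeat each pixel horizontally
        out.append(doubled)
        out.append(list(doubled))  # repeat the whole row vertically
    return out


# 1080 -> 2160 is a whole-number factor; 480 -> 720 or 1080 is not.
for src, dst in [(1080, 2160), (480, 720), (480, 1080)]:
    f = scale_factor(src, dst)
    kind = "integer" if f.denominator == 1 else "fractional"
    print(f"{src} -> {dst}: x{f} ({kind})")

# Fitting a 4096-wide cinema frame onto a 3840-wide panel.
crop_per_side = (4096 - 3840) // 2     # pixels lost on each side if the image is cropped
fit_width = scale_factor(4096, 3840)   # shrink factor if it is scaled down and letterboxed
print(f"crop {crop_per_side} px per side, or scale by {fit_width}")
```

With an integer factor every output pixel maps back to exactly one source pixel, while a 3/2 or 9/4 factor forces the scaler to interpolate between neighbours. The cinema case is the reverse problem: either 128 pixels disappear off each edge or the whole frame shrinks by 15/16.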

New content will also start to be mastered in compatible formats, so over time the content issue may solve itself. Here is one thing that won’t change soon however: infrastructure.

And that, my friends, is the real enemy of 4k/UHD. It is the enemy in a variety of ways and causes problems in HDMI, video extension/distribution, broadcast, storage, and streaming.

As a precursor to this whole conversation, you have to understand that there is more to a video signal’s bandwidth than the number of pixels. You also have to factor in refresh rate, color sampling rate, and bit depth. 4k/UHD screens need a larger color space than the old 1080p screens to ensure smooth transitions between colors and make the images look good. You also need more luminance from the screens to bring these colors out, and with more luminance you need higher refresh rates to reduce potential image flicker. (See the Future of Ultra HD and the SMPTE UHD committee report.) These things will all be important later.
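A rough way to see how those factors stack up is to multiply them out. This Python sketch computes the uncompressed data rate of the active picture only (no blanking intervals, no link encoding overhead), so real link rates will be higher; the samples per pixel for each chroma format are the usual averages (4:4:4 = 3, 4:2:2 = 2, 4:2:0 = 1.5), and the function name is just a label for this example.

```python
# Average samples per pixel for each chroma subsampling scheme.
SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:2": 2.0, "4:2:0": 1.5}


def raw_video_gbps(width: int, height: int, fps: float, bit_depth: int,
                   sampling: str = "4:4:4") -> float:
    """Uncompressed active-picture data rate in Gbit/s (no blanking, no link overhead)."""
    bits_per_pixel = SAMPLES_PER_PIXEL[sampling] * bit_depth
    return width * height * fps * bits_per_pixel / 1e9


examples = [
    ("1080p60, 8-bit 4:4:4", (1920, 1080, 60, 8)),
    ("2160p60, 8-bit 4:4:4", (3840, 2160, 60, 8)),
    ("2160p60, 10-bit 4:4:4", (3840, 2160, 60, 10)),
    ("2160p120, 10-bit 4:4:4", (3840, 2160, 120, 10)),
]
for label, args in examples:
    print(f"{label}: {raw_video_gbps(*args):.2f} Gbit/s")
```

Going from 1080p to 2160p alone quadruples the rate (about 2.99 to 11.94 Gbit/s at 8-bit, 60 fps), and every extra bit of depth or extra frame per second multiplies it again.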

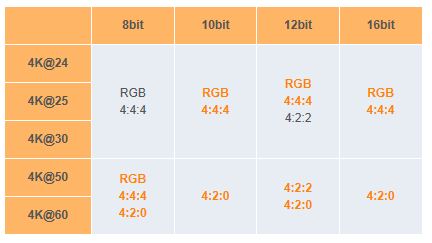

HDMI- Even if we have 4k/UHD screens and 4k/UHD native signals we have to get the signals to the display in one way or another. In our ProAV world that means HDMI. Many seem optimistic that the new HDMI 2.0 spec is all we need to cure these ills. I can’t blame them based on some of the bravado around the spec. If you go to Wikipedia and look up HDMI 2.0 you’ll find this chart.

The chart says that HDMI 2.0 supports 4k resolution, that’s great! It says it supports 4k at 60fps, even better! And it says it supports the full RGB 4:4:4 color space, amazing! I can hear you now, “Mark, what’s your problem? HDMI 2.0 supports 4k resolution at a high frame rate and at a high color sampling rate.” Well, now take a look at this chart from HDMI.org.

Notice anything?

For one, this chart includes bit depth, which was noticeably absent from the other chart, although if they had included it, I’m sure it would have just said the spec supports 8, 10, 12, and 16 bit color. The main thing, though, is that it actually gives you a matrix of what is supported simultaneously.

That kinda matters.

So does HDMI 2.0 currently support high bit-depth, high color sampling rate, high frame rate video at 4k/UHD? Nope! You are going to trade frame rate for color depth and quality.
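To see where that trade comes from, here is a simplified Python check against HDMI 2.0’s 600 MHz TMDS clock ceiling. It assumes the standard CTA-861 2160p timing (a 4400 x 2250 total raster, so 594 MHz at 60 Hz and 297 MHz at 30 Hz), that deep color scales the TMDS clock by bit depth / 8 for 4:4:4, that 4:2:2 (up to 12 bit) runs at the 8-bit clock, and that 4:2:0 halves the clock first. It is a sketch of the arithmetic, not a substitute for the HDMI.org table.

```python
HDMI20_MAX_TMDS_MHZ = 600.0      # HDMI 2.0 maximum TMDS character rate
TOTAL_W, TOTAL_H = 4400, 2250    # assumed CTA-861 2160p raster incl. blanking (30/60 Hz timings)


def tmds_clock_mhz(fps: int, bit_depth: int, sampling: str) -> float:
    """Approximate TMDS clock needed for a 3840x2160 signal (simplified model)."""
    pixel_clock = TOTAL_W * TOTAL_H * fps / 1e6   # 594 MHz at 60 Hz, 297 MHz at 30 Hz
    if sampling == "4:2:2":
        if bit_depth > 12:
            raise ValueError("HDMI carries 4:2:2 at up to 12 bits")
        return pixel_clock                         # 4:2:2 is packed into the 8-bit clock
    if sampling == "4:2:0":
        pixel_clock /= 2                           # 4:2:0 runs at half the 4:4:4 clock
    return pixel_clock * bit_depth / 8             # deep colour raises the clock


def fits_hdmi20(fps: int, bit_depth: int, sampling: str) -> bool:
    return tmds_clock_mhz(fps, bit_depth, sampling) <= HDMI20_MAX_TMDS_MHZ


for fps in (30, 60):
    for sampling in ("4:4:4", "4:2:2", "4:2:0"):
        depths = (8, 10, 12) if sampling == "4:2:2" else (8, 10, 12, 16)
        ok = [d for d in depths if fits_hdmi20(fps, d, sampling)]
        print(f"2160p{fps} {sampling}: fits at {ok} bit")
```

Under these assumptions, 2160p60 only fits as 4:4:4 at 8 bit; getting 10 or 12 bit at 60 fps means dropping to 4:2:2 or 4:2:0, while 30 fps leaves room for full 4:4:4 deep color.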

This is also the final nail in 3D’s coffin, as the argument was that you could do passive 3D at 1080p per eye by using interlaced left- and right-eye signals. If you didn’t like 3D at 60 fps, I’d wager you’re really going to hate it at half that speed.

Video Extension/Distribution- So even if you could get what you wanted for a great 4k signal from the source device to the display via HDMI 2.0 (which you can’t), you will still have situations where you need to go farther than the 15 feet the cable gives you, or go through some type of distribution amplifier (DA) to get that signal to the display(s) you want.

Let me make things easier by assuming that the video extenders (HDBaseT or video over IP) and the DA (whether an HDBaseT switch or an enterprise-level network switch) are capable of the bandwidth required. (That may be a stretch as well, but we’ll concede it here.)

The problem is that the existing CatX based infrastructure probably isn’t capable of passing it.

I’ll point you to a cable challenge done by the RedBand/AV Nation folks at InfoComm14. The long and short of it is that the error rate for 4k needs to be less than 1 per billion to have good video. Two manufacturers had 7-12 times that error rate. Crestron’s new “4k” cable actually tested between 1 and 2 parts per billion on its three attempts (somehow the acceptable spec seemed to stretch to 2 parts per billion during Crestron’s part of the test, but they were a sponsor of the test, so I’m sure that had nothing at all to do with it), and Kramer’s cable came in at 0.3 parts per billion.
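To put “1 per billion” in perspective, here is a back-of-the-envelope Python calculation. It reads the figures as a bit error rate and, purely for illustration, assumes the cable is carrying the raw HDMI TMDS line rate for the signal (three channels, 10 bits per character, 297 MHz for 2160p30 at 8 bit). HDBaseT encodes the signal differently, so the absolute numbers are only an order-of-magnitude guide, and the helper names are hypothetical.

```python
def tmds_line_rate_gbps(pixel_clock_mhz: float, bit_depth: int = 8) -> float:
    """Raw TMDS bit rate: 3 data channels x 10 bits per character, scaled by deep colour."""
    return pixel_clock_mhz * (bit_depth / 8) * 10 * 3 / 1000


def errors_per_second(line_rate_gbps: float, bit_error_rate: float) -> float:
    """Expected bit errors per second, assuming errors are spread evenly."""
    return line_rate_gbps * 1e9 * bit_error_rate


rate_30 = tmds_line_rate_gbps(297)   # 2160p30, 8-bit 4:4:4 (assumed timing)
rate_60 = tmds_line_rate_gbps(594)   # 2160p60, 8-bit 4:4:4 (assumed timing)

for label, ber in [("0.3 per billion", 0.3e-9), ("1 per billion", 1e-9),
                   ("2 per billion", 2e-9), ("12 per billion", 12e-9)]:
    e30 = errors_per_second(rate_30, ber)
    e60 = errors_per_second(rate_60, ber)
    print(f"{label}: {e30:.1f} errors/s at 30 fps ({e30 / 30:.2f} per frame), "
          f"{e60:.1f} errors/s at 60 fps")
```

Even at the 1-per-billion target that works out to roughly nine bit errors every second at 30 fps, and because 60 fps doubles the bits on the wire, the same error rate would produce twice as many errors per second.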

The point is, out of 4 types tested, only 1 really passed; even the “4k” cable from Crestron didn’t get below 1 part per billion. The only cable that passed seemed to be Kramer’s, and who do you know pulling Kramer cable? Looks like we should be going forward, though!

If you watch the video and look at the screenshots of the test meter, you may notice one other thing as well. The test was 3840 x 2160p at…30fps. I have a feeling that if you double that frame rate, the errors will increase as well.

So if you plan to use existing CatX-based video distribution or network cabling for HDBaseT or video over IP at 4k/UHD, you may need to do some onsite testing first. You may very well need a whole new backbone, and the CatX cables needed most likely won’t come cheap. This will diminish the ROI of upgrading to, or adopting early, 4k/UHD and may keep these systems pushing 1080p well into the future.

Broadcast- Quite a few people put forward the idea that wide consumer acceptance of a technology translates into faster commercial adoption. A great number of the 4 million sets expected to be shipping by 2017 will be consumer displays.

Given these two facts, we can infer that if the consumer 4k/UHD experience isn’t spectacular, then it may slow the ProAV adoption.

I referenced a document put out by SMPTE above, and that document specifically looks at the challenges of adopting and distributing UHD-1 (and later UHD-2, or 8k). The SMPTE report is 42 pages, so let me boil down a couple of issues with broadcasting 4k/UHD.

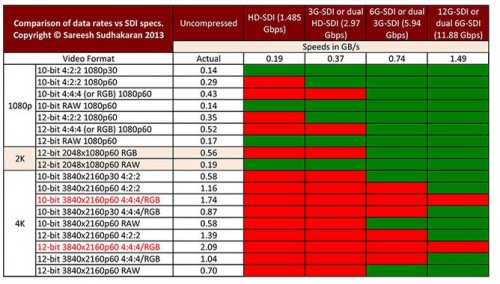

The first one is again infrastructure. In the broadcast world they are not saddled with the HDMI standard, so the issues are different. In this space they use the serial digital interface (SDI) standard. It uses coaxial cables, so it does not exhibit the finicky electrical qualities that cause HDMI cable to behave so inconsistently, but it still has bandwidth issues. For SDI bandwidths, take a look at the chart.

You can see that, unlike HDMI, broadcast isn’t trying to fool us by including 8 bit color depth specifications. However, you’ll notice that even 12G-SDI/dual 6G-SDI won’t carry 10 or 12 bit 4:4:4 streams at 60 fps either. Now take into account that most TV stations have even less capable infrastructure than that, and we have an issue.
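The same kind of arithmetic works for SDI, which carries the full raster including blanking. This simplified Python sketch assumes the 4400 x 2250 total raster used for 2160p at 60 Hz and the nominal single-link rates of the SDI family (1.485, 2.97, 5.94 and 11.88 Gbit/s); it ignores the details of how each standard actually maps 4:4:4 into its links, and the function names are hypothetical.

```python
from typing import Optional

SDI_LINK_GBPS = {"HD-SDI": 1.485, "3G-SDI": 2.97, "6G-SDI": 5.94, "12G-SDI": 11.88}
SAMPLES_PER_PIXEL = {"4:2:2": 2, "4:4:4": 3}
TOTAL_W, TOTAL_H = 4400, 2250   # assumed full 2160p raster incl. blanking


def sdi_payload_gbps(fps: int, bit_depth: int, sampling: str) -> float:
    """Approximate serial rate for a 2160p stream: total samples x bits per sample."""
    return TOTAL_W * TOTAL_H * fps * SAMPLES_PER_PIXEL[sampling] * bit_depth / 1e9


def smallest_link(rate_gbps: float) -> Optional[str]:
    """Slowest single SDI link that can carry the rate, or None if none can."""
    for name, capacity in sorted(SDI_LINK_GBPS.items(), key=lambda kv: kv[1]):
        if rate_gbps <= capacity + 1e-9:   # small tolerance for float rounding
            return name
    return None


for sampling, depth in [("4:2:2", 10), ("4:4:4", 10), ("4:4:4", 12)]:
    rate = sdi_payload_gbps(60, depth, sampling)
    print(f"2160p60 {depth}-bit {sampling}: {rate:.2f} Gbit/s -> {smallest_link(rate)}")
```

In this model only 10-bit 4:2:2 squeezes into a single 12G-SDI link at 60 fps; the 10 and 12 bit 4:4:4 variants overshoot it, and a dual 6G-SDI pair adds up to the same 11.88 Gbit/s.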

SDI equipment is NOT cheap. Think about how long it took for all channels just to go to the digital standard, and how many more still aren’t in regular HD, and you may get some sense of the scope here.

There is a bottleneck at the station level, and SMPTE’s committee is actively seeking ways to compress 4k/UHD down enough to squeeze it through these pipes. How do you think that will affect the quality and the experience on the other end? It’s great to have more resolution, until you realize that they flattened out the video signal and gave you globular masses of colors that dance the compression tango.
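How hard that squeeze is depends on the pipe. This short Python sketch divides the uncompressed active-picture rate by a link’s capacity to get the minimum compression ratio; the source format (2160p60, 10-bit 4:4:4) and the choice of 3G-SDI and HD-SDI as stand-ins for “lesser infrastructure” are assumptions for illustration.

```python
def raw_video_gbps(width: int, height: int, fps: int, bit_depth: int,
                   samples_per_pixel: float = 3.0) -> float:
    """Uncompressed active-picture rate in Gbit/s (4:4:4 by default)."""
    return width * height * fps * samples_per_pixel * bit_depth / 1e9


def min_compression_ratio(source_gbps: float, pipe_gbps: float) -> float:
    """How many times smaller the stream must get to fit the pipe (1.0 = fits as-is)."""
    return max(1.0, source_gbps / pipe_gbps)


source = raw_video_gbps(3840, 2160, 60, 10)   # 2160p60, 10-bit 4:4:4
for pipe_name, pipe_gbps in [("3G-SDI", 2.97), ("HD-SDI", 1.485)]:
    ratio = min_compression_ratio(source, pipe_gbps)
    print(f"{pipe_name}: compress at least {ratio:.1f}:1")
```

That is roughly 5:1 into 3G-SDI and 10:1 into HD-SDI, and those are only the floors before any real-world overhead is counted.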

The second issue is that broadcast has to create a standard that addresses both 1080p and 4k/UHD, and these two formats use different color spaces (ITU-R BT.709 for HD, BT.2020 for UHD). SMPTE has some real concerns about color translation, as converting the UHD color space down to the HD color space can cause the resulting image to actually look worse. It is equally hard to take existing HD content, with its smaller color space, and expand it for 4k/UHD.

I’m unfortunately not ISF certified, so I only understand gamut conversion on a rudimentary level, but if you’re so inclined and want a deeper explanation, you can read pages 29 and 30 of that report. My point is that if they’re worried about it…we should be too!
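For a rudimentary feel of why downconversion is tricky, here is a minimal pure-Python sketch that builds a BT.2020 to BT.709 conversion matrix from each standard’s published primaries and the D65 white point, then pushes a fully saturated BT.2020 green through it. It works on linear light and ignores transfer functions, and the helper names are hypothetical; it illustrates the math, not the conversion SMPTE recommends.

```python
# CIE 1931 xy chromaticities of the red, green and blue primaries, plus D65 white.
BT709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
BT2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]
D65 = (0.3127, 0.3290)

Matrix = list[list[float]]


def xy_to_xyz(x: float, y: float) -> list[float]:
    """Chromaticity -> XYZ with luminance Y normalised to 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def inv3(m: Matrix) -> Matrix:
    """Inverse of a 3x3 matrix via cofactors (cyclic indices give the signs)."""
    def cof(r: int, c: int) -> float:
        return (m[(r + 1) % 3][(c + 1) % 3] * m[(r + 2) % 3][(c + 2) % 3]
                - m[(r + 1) % 3][(c + 2) % 3] * m[(r + 2) % 3][(c + 1) % 3])
    det = sum(m[0][c] * cof(0, c) for c in range(3))
    return [[cof(c, r) / det for c in range(3)] for r in range(3)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def apply(m: Matrix, v: list[float]) -> list[float]:
    return [sum(m[i][k] * v[k] for k in range(3)) for i in range(3)]


def rgb_to_xyz(primaries: list[tuple[float, float]], white: tuple[float, float]) -> Matrix:
    """Linear RGB -> XYZ, scaled so that RGB (1, 1, 1) lands on the white point."""
    cols = [xy_to_xyz(x, y) for x, y in primaries]
    p = [[cols[c][r] for c in range(3)] for r in range(3)]  # primaries as columns
    scale = apply(inv3(p), xy_to_xyz(*white))
    return [[p[r][c] * scale[c] for c in range(3)] for r in range(3)]


BT2020_TO_BT709 = matmul(inv3(rgb_to_xyz(BT709, D65)), rgb_to_xyz(BT2020, D65))

green_2020 = [0.0, 1.0, 0.0]                    # fully saturated BT.2020 green (linear)
green_709 = apply(BT2020_TO_BT709, green_2020)  # negative R and B: outside BT.709
clipped = [min(1.0, max(0.0, c)) for c in green_709]
print("in BT.709 terms:", [round(c, 3) for c in green_709], "clipped to:", clipped)
```

The negative red and blue values mean that color simply does not exist in the HD gamut, so something has to give: clip it (as here, which collapses every greener-than-709 green onto the same value) or compress the gamut, and either choice changes how the picture looks.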

The long and short of it is that 4k/UHD TVs playing broadcast content will most likely end up looking worse than a 1080p set playing native 1080i content, and that is a problem. Even native 4k content will be squashed by the time it gets to the set.

Storage- In his piece “4k is Here!!! Now What?”, Josh Srago says that he sees the “re-emergence of the home media server as our best option” for managing 4k/UHD content.

I agree with Srago that Blu-ray, although it has the storage capacity, was not set up to deliver 10 or 12 bit content. To quote another set of HD experts discussing the possibility of storing normal 1080p content in deep color (10 bit) on a Blu-ray disc-

“Neither HD DVD nor BD have ANYTHING in the specs to allow Deep Color encoding so they cannot benefit from this tech. When a device (either displays or players) says it supports Deep Color, all it is saying is that the HDMI connection supports it.”

Srago loses me later in his argument, however, when he goes on to relate that-

“With the return of the media server and the current sizes of inexpensive digital storage the only issue that comes up is the amount of data that you’re going to consume while downloading these movies. By using a local storage device you are able to avoid the issues involved in network bandwidth, you will be able to ensure that the local signal between player and display will not have bandwidth issues as you can avoid sending true 4K signals through a single HDMI or Display Port connection, and you have nearly unlimited storage capacity with the available options in drive space.”

Did I just hear the four letters HDMI in there?

It seems Srago was aware of the network bandwidth difficulties that may be inherent without a direct connection from the media server to the display, but he loses me in thinking that HDMI will not cause the exact same issue at the last mile. Storage of 4k is a problem, at least for high frame rate, high bit depth, high sampling rate 4k/UHD.

You can store it in a server wonderfully, but what good does that do you if you can’t get it out?
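A quick Python calculation shows how the “high everything” case stacks up on disk. The two-hour running time and the 50 Mbit/s compressed bitrate are hypothetical values chosen for illustration, not figures from either article.

```python
def raw_bits_per_second(width: int, height: int, fps: int, bit_depth: int,
                        samples_per_pixel: float = 3.0) -> float:
    """Uncompressed active-picture bit rate (4:4:4 by default)."""
    return width * height * fps * samples_per_pixel * bit_depth


def size_tb(bits_per_second: float, seconds: float) -> float:
    """Storage needed in terabytes (10^12 bytes)."""
    return bits_per_second * seconds / 8 / 1e12


RUNTIME_S = 2 * 60 * 60          # hypothetical two-hour film
COMPRESSED_BPS = 50e6            # hypothetical 50 Mbit/s compressed stream

for fps in (24, 60):
    raw = size_tb(raw_bits_per_second(3840, 2160, fps, 10), RUNTIME_S)
    print(f"2160p{fps} 10-bit 4:4:4, uncompressed: {raw:.2f} TB")
print(f"compressed at 50 Mbit/s: {size_tb(COMPRESSED_BPS, RUNTIME_S) * 1000:.0f} GB")
```

Uncompressed, a single film lands in the multi-terabyte range, and whichever number you plan around, every one of those bits still has to leave the server through an HDMI link.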

Bandwidth- I’ll again give a nod to Josh Srago’s article above where Srago asserts that-

“the limitations in our existing network infrastructure, while streaming media is dominating how we view and receive our content, it isn’t yet consistent enough for widespread reception of 4K in our homes.”

This is the same sentiment Brian David Johnson expresses in Screen Future about the future of TV. Bandwidth in the existing TV, satellite, and cellular networks is not up to the level it needs to be. These networks were not built with the intent of delivering so much data to so many screens. At some point, data prices will start to rise dramatically to pay for upgrades to these networks.
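To make the network side concrete, here is a small Python calculation of how much data a household pulls down when streaming. The bitrates (5 Mbit/s for an HD stream, 25 Mbit/s for a 4k/UHD stream) and the four hours of viewing per day are hypothetical round numbers, not measurements.

```python
def gb_per_hour(stream_mbps: float) -> float:
    """Data transferred by one hour of constant-bitrate streaming, in GB (10^9 bytes)."""
    return stream_mbps * 1e6 * 3600 / 8 / 1e9


HOURS_PER_DAY = 4    # hypothetical viewing habit
DAYS = 30

for label, mbps in [("HD stream", 5), ("4k/UHD stream", 25)]:
    per_hour = gb_per_hour(mbps)
    per_month = per_hour * HOURS_PER_DAY * DAYS
    print(f"{label} at {mbps} Mbit/s: {per_hour:.2f} GB/hour, {per_month:.0f} GB/month")
```

Multiply the per-home figure by every 4k/UHD screen on a network and it is easy to see why carriers will want to recover the cost of upgrades.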

So when this happens, there is also another concern: the relationship between the company that owns the cloud-based content to be streamed and the ISP. Josh Srago has written a ton about that as well, and about the issue of Net Neutrality, but that is a whole other subject.

My conclusion here is that even if 4k content comes quickly, which remains to be seen, we are not ready for it from an infrastructure perspective. We don’t have the bandwidth for mass adoption of streaming content. We don’t have a medium to store it on, and even if we get it into a server, we can’t get it to our displays due to issues with HDMI. We can’t extend it over existing CatX-based infrastructure. Broadcast can’t distribute the content without compressing it down, and has some potential issues with converting content between formats easily.

The enemy of 4k/UHD right now is infrastructure, and that is not easily addressed. It will be a long, slow, and tedious process that may just stifle the sales of 4k/UHD displays as the drama unfolds.

As we forge ahead, let’s educate our customers on the advantages and disadvantages and help them maximize their ROI while installing new infrastructure suitable for future needs and upgrades.