Too Many Captain Kirks, Not Enough Scottys

For well more than a century, the audio world was warm, straightforward and essentially analog at its core. It was a copper-based universe of wire, resistors, capacitors, transformers, vacuum tubes, transistors and electronic parts you could touch, feel, see and identify easily.

And then, as things are wont to do, it all changed. Individual circuit components combined and merged onto rectangles of silicon substrate and became ‘integrated circuits’ and ‘chips,’ while surface-mount, micro-miniature resistors and other circuit elements became tiny little dots on complex multi-layer circuit boards, unknowable and un-repairable.

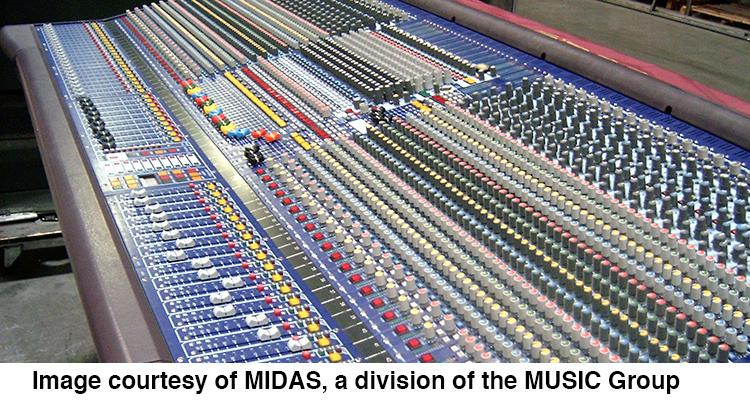

Let’s look at two very well known mixing consoles as examples of this evolution.

First we have one of the classics of the analog age, the MIDAS Heritage series. Every knob, switch, button, fader and control has a specific known function and resultant action.

Its evolutionary successor is the MIDAS pro X CC series of DIGITAL mixing consoles, wherein menus, multi-purpose control surfaces, assignable control and all the other advancements of the new generation are in full flourish.

If you just arrived on this planet from another space-time continuum, which device would seem more obvious in its functionality and operation? Wait, think about it dispassionately.

In this new, all-digital, all-the-time world we now can be greeted by an on-screen message like this:

Because everything is now, at its core, some form of computer, everything is also based on software. Once upon a time, audio was knowable; pathways, gain structure, processing systems and devices and all the rest were, at their worst, obvious functional blocks. Now we have a code-based world: hardware runs on software, and software requires fairly constant updating, even for minor issues.

It’s not a choice anymore whether to update or upgrade hardware, because the software that runs it WILL require updates or in some cases wholesale platform shifts or complete revamping of the foundational operating system (OS), whether you want to or not.

Think about it for a few thousand milliseconds (milliseconds are centuries in software land after all); let’s look at the world’s most ubiquitous operating system as an example. In just slightly over three decades we have gone from Windows 1 to Windows 10, but wait! There were so many, many, many steps in between:

This massive list does not even consider the CPU architecture changes required in many cases to even run the OS in question. Every one of these imposed and often unwanted “updates” supposedly provided improvements, but as it rapidly became more and more complex, this software often became unstable and incompatible with other devices and also caused the long, long list of problems now all-too-familiar to anyone using it. How many hours have you or your company spent transitioning from one version to another? And then add in the multiples of that time you spent getting your clients’ systems to live in the OS’s brave new world. I’ll bet it can be measured in man-years at a minimum.

Or as the often too realistic Dilbert comic strip so succinctly put it:

It frequently seems as if the folks in software land are using a developmental methodology that often approaches prayer, and products get released, knowingly, that have this idea at their center:

How many times has an algorithm or piece of easily hackable code brought a whole system down because it was badly written, poorly tested (if it was tested at all) and never checked to see if it caused more problems than it fixed?

Part of the problem here is that there are too many people at the top of the organizations responsible for our software-based digital world, and not enough people actually doing the hard work of making sure the stuff works. Scott Adams often skewers this in his Dilbert strips, but the reality is he’s not just being clever or funny: he’s pointing out a serious and growing problem.

And I haven’t even started on the stuff living on top of the OS. (By the way, although many acolytes would dispute this, the once safe and sound world of Apple OS is no longer immune to these issues, as witnessed by the many problems that have arisen in the last few releases and the hacks that followed. And please don’t barrage me with Linux ‘miracles’; that’s not the point here.)

THE DSP CONUNDRUM

What is the point of this cautionary saga, you might ask? It’s this: Every “block” in a current generation sound system or video system is packed to the gills with DSP capabilities, often totally proprietary, running on customized code and software, isolated from any other block by that design choice. Yet we as integrators, designers, consultants and users are supposed to find a way to make all these separate little feudal kingdoms of high-powered silicon work harmoniously and function seamlessly, essentially on our own?

Leaving out of this debate the numerous and often complex network backbone issues and digital audio transport complexities (which require their own discussions), we are still faced with a very steep hierarchy mountain to climb.

Let’s look at a typical sound reinforcement system component map. Working backwards from the end point loudspeakers we have:

- Loudspeaker DSP (on board or controller based)

- Amplifier DSP (external or on-board the loudspeakers)

- Network Distribution (with DSP)

- Audio Management/Transport (with DSP)

- Mixing console (with DSP per channel and master/matrix/buss)

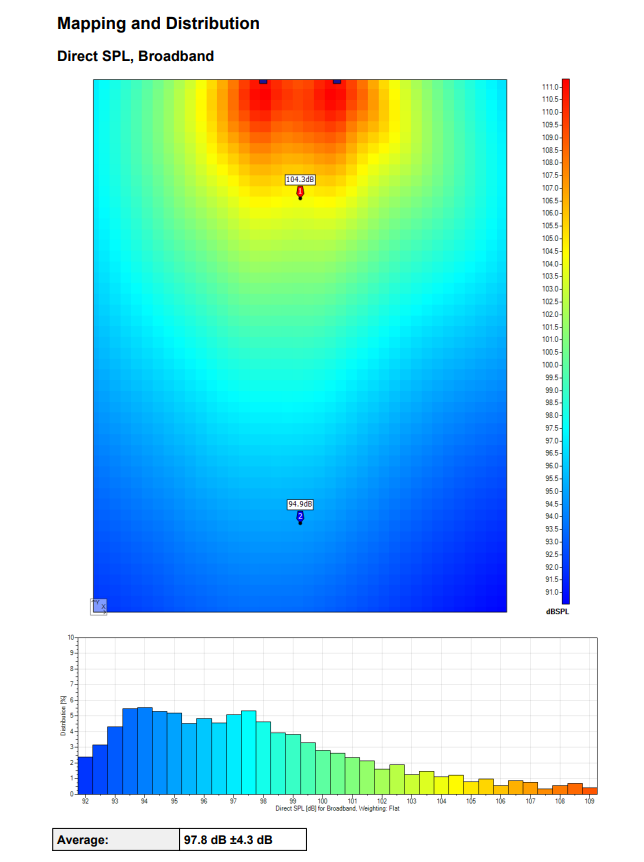

In most cases we have at least five DSP blocks, and theoretically perhaps even twice that, depending on the hardware chosen and its functionality. In the networked sound system world, each of these ‘blocks’ may have an IP address (or multiple IP addresses) and a large number of I/O paths, which also might have some level of DSP built in.

Structurally, which block is in charge and which are following? It might seem obvious, but from the multiple DSPs’ perspectives, it’s not, because any or all of these fully-functional, highly-capable processing points is potentially capable of being either a Captain Kirk or a Scotty.

If this ‘who’s in charge?’ issue is not designed in and resolved before anything is installed or configured, you are creating a witches’ brew of bubbling conflict and operational disaster.

If you and your clients/users/operators don’t know where a particular task is being implemented or how it interfaces with the many other things going on simultaneously, it can and frankly WILL create a substantial functional choke point and significant confusion. Is the System EQ being done in the loudspeaker processing EQ, the amplifier EQ or somewhere else? Is it accessible or locked down, password-protected and safe? Is the routing and distribution matrix handling conflict resolution on the network, or is that being done by some other device (a switch for example)?
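One way to make the "where is this task being done?" question concrete is to write the answer down as data and check it. The following is only a minimal sketch: the block names, the function names and the `active_functions` table are all hypothetical and stand in for whatever your own system documentation records.

```python
from collections import defaultdict

# Hypothetical record of which functions are ACTIVE in each DSP block.
# Block and function names are illustrative, not taken from any real product.
active_functions = {
    "mixing_console": ["channel_eq", "dynamics", "system_eq"],
    "audio_management": ["routing_matrix", "system_eq"],
    "amplifier_dsp": ["limiter", "system_eq"],
    "loudspeaker_dsp": ["crossover", "driver_protection"],
}


def find_owners(config):
    """Map each function to the list of blocks where it is active."""
    owners = defaultdict(list)
    for block, functions in config.items():
        for function in functions:
            owners[function].append(block)
    return dict(owners)


def find_conflicts(config):
    """Return only the functions that are active in more than one block."""
    return {
        function: blocks
        for function, blocks in find_owners(config).items()
        if len(blocks) > 1
    }


conflicts = find_conflicts(active_functions)
for function, blocks in conflicts.items():
    print(f"{function} is active in {len(blocks)} blocks: {', '.join(blocks)}")
```

With this made-up table, the check flags `system_eq` as active in three places at once, which is exactly the kind of overlap that needs a single designated owner before anything is installed.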

And… what about the accumulating pass-through delay from each successive layer of DSP and each A/D or D/A conversion? Are you aware of how many D/A and A/D conversions are taking place? Every one of them adds latency, and together they can create a surprisingly large time lag, not to mention the potential signal degradation and data loss that can occur at each step. Oh, and by the way, are all of these steps happening at the same sample rate and word length (bit depth)? Do you know? Does anyone know? Has anyone mapped this out? In advance?
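Mapping this out in advance does not need special tools. As a rough sketch, assuming a signal chain described as a simple list of stages, the conversions and format changes can be counted mechanically. The `Stage` type, the stage names and every sample rate and word length below are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    name: str
    domain: str                # "analog" or "digital"
    sample_rate_hz: int = 0    # only meaningful for digital stages
    word_length_bits: int = 0  # only meaningful for digital stages


# Hypothetical chain, input to output. All values are illustrative.
chain = [
    Stage("microphone preamp", "analog"),
    Stage("mixing console", "digital", 96_000, 24),
    Stage("audio transport", "digital", 48_000, 24),
    Stage("analog link to amplifier", "analog"),
    Stage("amplifier DSP", "digital", 48_000, 24),
    Stage("power stage and loudspeaker", "analog"),
]


def audit(stages):
    """List every conversion or format change between adjacent stages."""
    findings = []
    for prev, cur in zip(stages, stages[1:]):
        link = f"{prev.name} -> {cur.name}"
        if prev.domain == "analog" and cur.domain == "digital":
            findings.append(("A/D conversion", link))
        elif prev.domain == "digital" and cur.domain == "analog":
            findings.append(("D/A conversion", link))
        elif prev.domain == "digital" and cur.domain == "digital":
            if prev.sample_rate_hz != cur.sample_rate_hz:
                findings.append(("sample-rate change", link))
            if prev.word_length_bits != cur.word_length_bits:
                findings.append(("word-length change", link))
    return findings


findings = audit(chain)
for kind, link in findings:
    print(f"{kind}: {link}")
```

In this invented chain the audit reports two A/D conversions, two D/A conversions and one sample-rate change, several of which could be avoided by keeping the signal digital at one format from console to amplifier.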

Is this beginning to look like a minefield to which you do not have a map? It should, because it is. If all the math and calculations on delay are not factored into the planning and design of a system, you can very quickly find yourself with a delay substantial enough to trouble anyone, professional or not, who has to use the sound system. And this kind of problem is subtle, often hard to quickly pin down and even harder to fix once the system architecture is in place and connected.

Do you know how to determine what device is producing how much signal delay and how to minimize it? Don’t forget, most DSP-based products have a baseline signal delay even if they are not actively processing audio. Unless you have hard-wired full signal bypasses built in around those devices’ DSP sections, you are accumulating delay whether you recognize it or not. Just setting a DSP section or function to bypass does not automatically guarantee that the signal is not passing from beginning to end of that particular block and thus gathering delay as it moves on through.
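The arithmetic behind a delay budget is simple addition, as the sketch below shows. It assumes each block can be described by a baseline latency (incurred even in bypass) plus an extra amount when its processing is active. The `Block` type and every millisecond figure are hypothetical; real numbers have to come from manufacturer data or from measurement.

```python
from dataclasses import dataclass

SPEED_OF_SOUND_M_PER_S = 343.0  # approximate, in air at about 20 degrees C


@dataclass
class Block:
    name: str
    baseline_ms: float    # latency incurred even when processing is bypassed
    processing_ms: float  # additional latency when processing is active
    active: bool = True

    def latency_ms(self):
        """Baseline always counts; processing only counts when active."""
        return self.baseline_ms + (self.processing_ms if self.active else 0.0)


# Hypothetical figures for the five blocks in the component map.
chain = [
    Block("mixing console", 1.0, 0.5),
    Block("audio management/transport", 0.5, 0.25),
    Block("network distribution", 1.0, 0.0),
    Block("amplifier DSP", 0.75, 0.5, active=False),  # set to bypass
    Block("loudspeaker DSP", 1.0, 1.5),
]


def total_latency_ms(blocks):
    """Sum the pass-through latency of every block in the chain."""
    return sum(block.latency_ms() for block in blocks)


for block in chain:
    state = "active" if block.active else "bypassed"
    print(f"{block.name:28s} {block.latency_ms():5.2f} ms ({state})")

total = total_latency_ms(chain)
# Express the delay as the distance sound travels in air in the same time.
equivalent_m = total / 1000.0 * SPEED_OF_SOUND_M_PER_S
print(f"total: {total:.2f} ms, roughly {equivalent_m:.2f} m of acoustic path")
```

Note that the bypassed amplifier DSP still contributes its baseline 0.75 ms in this example. Converting the total to an equivalent acoustic distance is one convenient way to explain the number to a client.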

Sure, all that signal processing power is a major adrenaline hit and can be used very effectively to do magical things. But it all comes with potentially negative side effects (that are often not very visible or audible) — problems that arise on top of the desired results.

So a word of caution is in order. Be sure you know IN ADVANCE how the system architecture will affect the audio or video signal as it makes its way from input to output, multiple times. Be aware of often not-well-documented time/delay accumulations when multiple DSPs are in the system. Be sure you have disabled or bypassed in a solid and difficult-to-change manner any functionality that you don’t want or don’t need. It is inevitable that with many DSPs at play, overlap and duplicate capability is present. It is essential to ensure you have taken this into consideration and carefully and logically decided, recorded, mapped out and documented in writing who is Captain Kirk and who is Scotty.

Engage!